By Francesco Evangelista, Sam L. Thomas, Alex Matrosov

Software and its supply chain are becoming increasingly complex, with developers relying more and more on third-party frameworks and libraries to ship fast and fulfill business objectives. While the prevalence of such libraries and frameworks is a boon, allowing developers to focus on specific business logic as opposed to “reinventing the wheel,” these dependencies often introduce vulnerabilities into otherwise secure codebases.

This problem is compounded by the advent of AI-driven software development. We have entered a new era of scale for cyber attacks, where low-hanging targets can be compromised essentially just by facing the internet. As LLMs produce code that often depends on outdated library versions, we risk creating unmaintainable and vulnerable software that is still functionally correct. But the risk is bidirectional: the quality of reasoning and logic in AI models has progressed so quickly that they can now independently figure out exploit development based on existing knowledge, a domain previously reserved for human experts. As demonstrated by recent experiments, the only things that matter now are context (domain-specific expertise) and the velocity of access to knowledge regarding new attack classes. This shift makes identifying security issues a vital, time-sensitive part of today’s software development process.

While there is an abundance of tools available for auditing and analyzing source code, few such tools exist for dependencies that ship as binaries. More often than not, developers can do little more than trust that the library or framework they're using is free of vulnerabilities. Most existing tools for checking whether binaries contain known vulnerabilities (including most binary SCA solutions) rely on scanning for version strings or on simple byte patterns, such as those expressed in YARA rules. Unfortunately, such an approach is ineffective when library maintainers backport fixes without updating version numbers, a scenario frequently observed in open source projects (like Lighttpd). Moreover, even when such approaches successfully identify a library version, they tell us nothing about the reachability of potentially vulnerable code paths. This results in huge numbers of false positives and provides little actionable insight.
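To make the backporting problem concrete, here is a minimal sketch of a version-string scanner. Everything in it is an assumption made for illustration: the library name `libexample`, the "fixed in" version, and the two toy byte blobs are invented, and this is not how any particular SCA product or VulHunt works.

```python
import re

# Hypothetical advisory: versions below this one are treated as vulnerable.
FIXED_IN = (1, 2, 5)

# Hypothetical version banner embedded in the binary.
VERSION_RE = re.compile(rb"libexample/(\d+)\.(\d+)\.(\d+)")


def naive_version_scan(blob: bytes):
    """Return (version, flagged) based only on an embedded version string."""
    match = VERSION_RE.search(blob)
    if match is None:
        return None, False
    version = tuple(int(group) for group in match.groups())
    return version, version < FIXED_IN


# Two toy "binaries" carrying the same banner. The second stands in for a
# build where the fix was backported without bumping the version number.
upstream_build = b"\x7fELF...libexample/1.2.3...vulnerable_code"
backported_build = b"\x7fELF...libexample/1.2.3...patched_code"

for name, blob in [("upstream", upstream_build), ("backported", backported_build)]:
    version, flagged = naive_version_scan(blob)
    print(name, version, "flagged" if flagged else "clean")
```

Both builds are flagged because the scanner never looks at the code itself. The backported build is a false positive, and the scanner has no way to tell the two apart.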

When we started Binarly in 2021, we had a distinct goal: to bring code-level detections (think CodeQL or Semgrep) to binaries. We recognized the need to move beyond simple byte patterns toward rules built on semantic meaning and program behavior. FwHunt was our first iteration, created to establish a domain-specific code semantics context for vulnerability detection.

To address the lack of context and visibility in existing tools, we developed VulHunt, a framework for performing automated binary analysis with a focus on identifying vulnerabilities and providing actionable insights, such as reachability properties and annotated code listings.

VulHunt represents the next generation of this approach, designed to double down on deeper semantic context and expand to broader software ecosystems beyond firmware. This is a critical shift for securing the era of AI-driven development. VulHunt is designed for multiple use cases: enabling security researchers to hunt for known and unknown vulnerabilities (identifying new vulnerabilities based on known code primitives), helping both offensive and product security teams verify whether binaries are affected by security issues, and assisting malware analysts in detecting potentially malicious code or backdoors.

At its core, VulHunt includes a dataflow analysis engine for tracing unsafe data paths, intermediate representation (IR) matching for architecture-agnostic analysis, pattern matching over both decompiled code and raw bytes, function signature integration to enhance detection quality and coverage, and type library support to improve explainability and detection reasoning.
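As a rough illustration of what tracing an unsafe data path means, the sketch below propagates taint through a toy, straight-line three-address IR. The `Instr` type, the `read_input` source, and the sample program are hypothetical and unrelated to VulHunt's actual IR or engine. A real engine would also handle control flow, memory, and calls between functions.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple


@dataclass(frozen=True)
class Instr:
    op: str                 # "call" or "assign"
    dst: Optional[str]      # variable written, if any
    args: Tuple[str, ...]   # variables read
    callee: str = ""        # function name when op == "call"


SOURCES = {"read_input"}    # hypothetical call returning attacker-controlled data
SINKS = {"memcpy": 2}       # callee -> index of the argument that must stay clean


def find_tainted_sinks(code: List[Instr]) -> List[int]:
    """Return indices of sink calls whose sensitive argument is tainted."""
    tainted: Set[str] = set()
    hits: List[int] = []
    for index, ins in enumerate(code):
        if ins.op == "call" and ins.callee in SINKS:
            arg_index = SINKS[ins.callee]
            if arg_index < len(ins.args) and ins.args[arg_index] in tainted:
                hits.append(index)
        is_source = ins.op == "call" and ins.callee in SOURCES
        reads_taint = any(arg in tainted for arg in ins.args)
        if ins.dst is not None:
            if is_source or reads_taint:
                tainted.add(ins.dst)       # taint flows into the destination
            else:
                tainted.discard(ins.dst)   # overwritten with clean data
    return hits


program = [
    Instr("call", "n", ("sock",), "read_input"),             # n = read_input(sock)
    Instr("assign", "size", ("n",)),                         # size = n
    Instr("call", None, ("dst", "src", "size"), "memcpy"),   # tainted size
    Instr("assign", "size", ("const_16",)),                  # size = 16
    Instr("call", None, ("dst", "src", "size"), "memcpy"),   # clean size
]

print(find_tainted_sinks(program))
```

Only the first `memcpy` (index 2) is reported, because its size argument derives from the input source. The second call uses a size that was overwritten with a constant.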

Now that this technology has been battle-tested at scale by industry leaders like Dell, Meta, and many others, we are bringing VulHunt to the RE//verse conference.

Existing tools each cover part of the problem, yet none provide a complete solution. Source-code scanners like Semgrep are fast and lightweight, but they are restricted to source code or decompiled code and cannot handle scenarios that require analysis of intermediate representations or binary code. CodeQL excels at complex, dataflow-based analysis, yet it is limited to source code and requires considerable expertise to write expressive queries. Tools like Joern provide powerful graph-based analyses, including taint tracking, but their limited semantic understanding means some context-specific or logic-based vulnerabilities may be missed. YARA can catch known patterns quickly and work directly on binaries, but existing YARA rules are generally insufficient to reliably detect new or slightly modified vulnerabilities, as YARA lacks deep semantic understanding. Even specialized tools like FwHunt are constrained to specific domains such as UEFI firmware, and while versatile, they struggle when code pattern detection is needed, which is the case in the majority of detection scenarios. Meanwhile, APIs in reverse engineering tools like IDA Pro or Binary Ninja, while extremely flexible, demand significant manual effort to scale when used to build custom vulnerability detection scripts or tools.

While these solutions provide valuable capabilities, each comes with limitations.

VulHunt stands apart by combining multiple analysis approaches under one framework. It integrates IR, pattern, and dataflow analysis with support for function signatures and type libraries, providing precise annotations while remaining extensible and scalable.

VulHunt is designed with scalability in mind: hundreds of binaries can be scanned in seconds, while heavier analyses, such as full dataflow tracking, run on demand, minimising unnecessary computational overhead. This scalability is critical for production environments, where a large set of binaries must be analysed quickly.

It is equally important during the development and testing of VulHunt rules. Refining detection logic often requires running analyses repeatedly, and VulHunt’s fast scanning and on-demand analyses make this process efficient, enabling rapid iteration and feedback.

The following table shows the typical scanning performance of VulHunt on a variety of Docker container images, including the number of files analyzed (hereafter referred to as components), their sizes, and the average scanning time. These results were obtained using a set of 25 rules targeting common libraries such as libexpat, libssh, libcurl, and others. The scan was performed on a machine with 64GB of RAM and a 14-core CPU.

* Latest version at the time of writing; amd64 targets

** Average over 10 runs

Another key goal of VulHunt is to make vulnerability findings actionable and understandable. To do this, VulHunt provides precise annotations that indicate the exact location of a vulnerability and contextual information about the affected functions and dataflows.

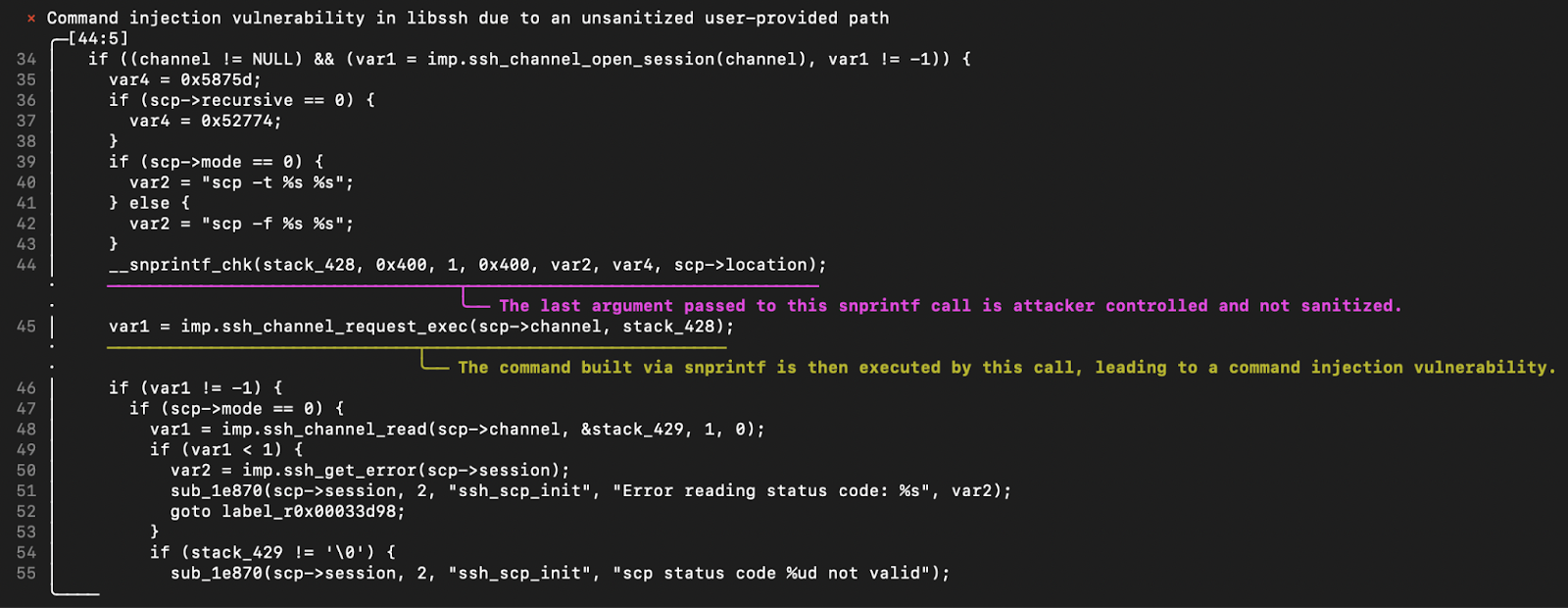

For example, the screenshot below shows a decompiled function with annotations highlighting a detected vulnerability:

In production, these annotations help security teams quickly triage issues and make informed decisions, reducing the time between detection and mitigation. During the development of VulHunt rules, clear explanations allow security engineers to understand why a detection was triggered and refine rules effectively, accelerating iteration and improving accuracy.

We created VulHunt to address a long-standing issue in binary vulnerability analysis: the high false-positive rate of tools that rely solely on version or library identification. These tools often flag components as vulnerable without explaining why, leaving security teams with the burden of manual triage.
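Much of that manual triage comes down to a question a version match cannot answer: can the flagged function actually be reached? The sketch below shows the idea on a toy call graph using a breadth-first search. The graph, the function names, and the set of "vulnerable" functions are all invented for illustration, and the code makes no claim about how VulHunt computes reachability.

```python
from collections import deque


def reachable(call_graph, entry_points):
    """Return every function reachable from the given entry points."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        function = queue.popleft()
        for callee in call_graph.get(function, ()):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen


# Hypothetical call graph recovered from a binary: caller -> callees.
call_graph = {
    "main": ["parse_config", "serve"],
    "serve": ["handle_request"],
    "handle_request": ["decode_header"],
    "legacy_import": ["parse_xml_v1"],  # never called from an entry point
}

# Hypothetical functions that a version-based scanner would flag.
vulnerable = {"decode_header", "parse_xml_v1"}

live = reachable(call_graph, ["main"])
for function in sorted(vulnerable):
    print(function, "reachable" if function in live else "not reachable")
```

In this toy graph, `decode_header` sits on a path from `main`, so it deserves attention first. `parse_xml_v1` is present in the binary but is not reachable from `main`, so a report that lists both with equal weight pushes that distinction onto the analyst.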

VulHunt tackles this in two ways:

Beyond reducing false positives, VulHunt also helps customers understand the spread and impact of vulnerabilities that may not yet be public. It can detect variants, measure propagation across products, and highlight what needs patching.

For end users, VulHunt can discover unknown potential vulnerabilities or confirm whether specific targets are affected by known issues.

One crucial goal for VulHunt was ease of use. When working with binaries, detecting certain vulnerabilities can be difficult, especially when variants exist, functions are inlined, or the binary is stripped. To address this, VulHunt provides two usage modes:

VulHunt works by executing what we call VulHunt rules: Lua scripts that interact with the VulHunt engine. These rules let users write detection logic for specific vulnerabilities or variants. To streamline rule development, we provide an SDK that enables interactive usage and a fast feedback loop, making it easy to write, test, and refine rules.

While rules are useful for detecting specific vulnerabilities or their variants, there is another use of VulHunt: vulnerability triaging and hunting. To make this possible, VulHunt exposes a set of primitives that can be used by Large Language Models (LLMs) to automatically analyse potentially vulnerable binaries and look for vulnerable patterns. This is especially useful when searching for a class of vulnerabilities or when a new vulnerability appears: the LLM can analyse the binary through these primitives to determine whether it contains the issue.

The following demo shows how VulHunt can quickly analyse a binary and detect real-world vulnerabilities.

While this demo highlights some core features, it represents just a glimpse of what VulHunt can do.

In this first part of the blog, we wanted to give a high-level overview of VulHunt, including the philosophy behind it, why it was created, what it is capable of, and how it compares with other existing industry solutions. We also discussed how VulHunt addresses the lack of visibility in legacy black-box solutions by bringing transparency to the software supply chain, and how it shifts the focus toward actionable risk prioritization.

In the second part of this blog, we’ll take a technical deep dive into VulHunt, exploring its architecture, core components, and capabilities. We’ll walk through how rule development works, what agentic usage looks like in practice, and how these elements come together to enable powerful automated security analysis. Following this technical overview, the series will continue with additional posts that demonstrate how to use VulHunt to identify real-world vulnerabilities using different techniques, featuring practical workflows, hands-on examples, and best practices for getting the most out of the framework.

Looking ahead, we plan to release the primitives for agentic usage around the time of the conference, alongside a second release of the VulHunt engine. This will enable users and developers to start using AI-first, LLM-driven vulnerability analysis directly. Additionally, we're excited to announce that VulHunt will be presented at RE//verse in March 2026, providing an opportunity to showcase its capabilities to the security research community.